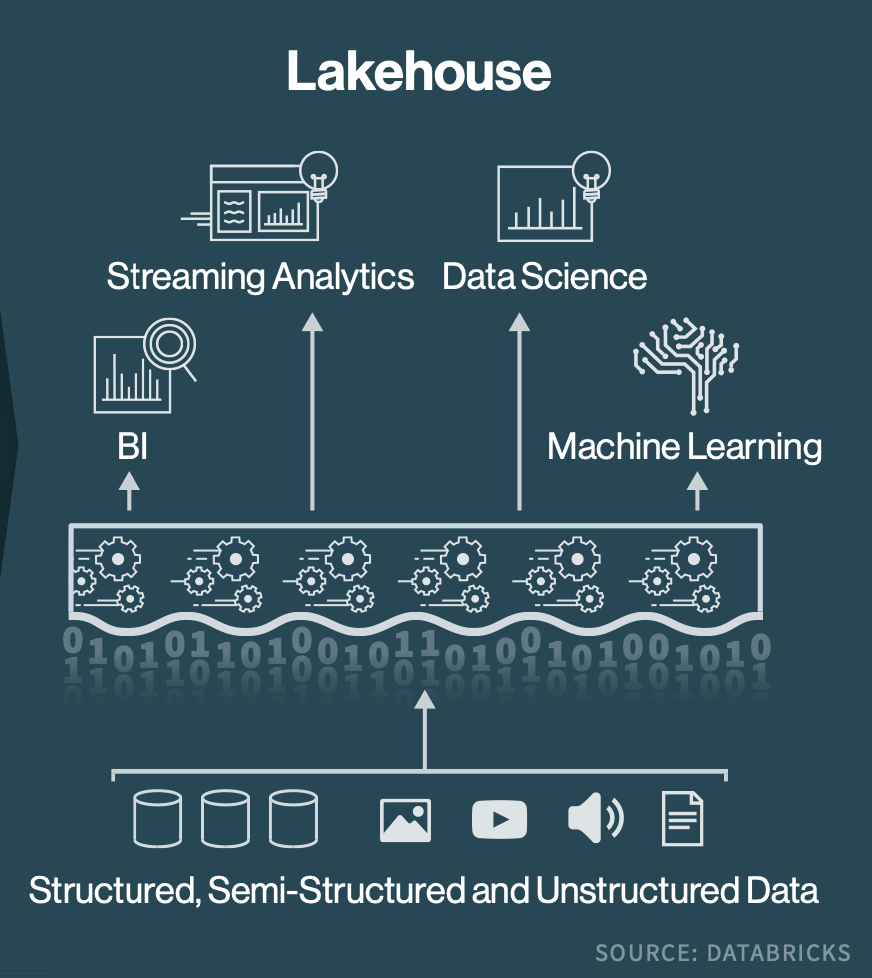

The data lakehouse concept was introduced early in 2020 by Databricks, a company founded in 2013 by the original creators of Apache Spark™, Delta Lake and MLflow.

The heavy black arrows show bulk data transfer between data stores, whereas the light blue dashed arrows show access to data stores from applications. The black arrows into and out of the enterprise data warehouse (EDW) denote strong transformation functions (schema on write) and are arranged in funnel patterns to imply consolidation of data in the EDW. These functions are largely IT controlled. The solid blue feeds to the data lake denote minimal levels of transformation of the incoming data and are operated independently, often by data scientists or business departments. Data in a lake is therefore often inconsistent (the so-called data swamp), and it is up to the users to manage its meaning (schema on read) and, a more difficult proposition, its quality.
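The contrast between schema on write and schema on read can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `ORDER_SCHEMA`, `write_with_schema`, and `read_with_schema` are hypothetical names invented for this example, and the "warehouse" and "lake" are plain in-memory lists rather than real data stores.

```python
import json

# Hypothetical schema, used only for this illustration.
ORDER_SCHEMA = {"order_id": int, "amount": float}


def apply_schema(record):
    """Coerce a raw record to the schema; raises if a field is missing or malformed."""
    return {name: cast(record[name]) for name, cast in ORDER_SCHEMA.items()}


def write_with_schema(store, record):
    """Schema on write: the record is validated before it is stored."""
    store.append(apply_schema(record))


def read_with_schema(raw_lines):
    """Schema on read: raw text was stored as-is; the reader assigns meaning."""
    good, bad = [], []
    for line in raw_lines:
        try:
            good.append(apply_schema(json.loads(line)))
        except (ValueError, KeyError, TypeError):
            bad.append(line)  # the consumer must decide what to do with these
    return good, bad


# Warehouse-style store: only conforming rows ever get in.
warehouse = []
write_with_schema(warehouse, {"order_id": "1", "amount": "9.50"})

# Lake-style store: anything can land, problems surface at read time.
lake = ['{"order_id": 2, "amount": "12.00"}', '{"order_id": "x"}', "not json"]
good, bad = read_with_schema(lake)
```

In the lake case two of the three stored lines fail at read time, which is the burden the paragraph above places on the users of the lake.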

Different arrow and widget designs represent different types of function and storage. The different types of data stores (relational, flat file, graph, key-value, and so on) have different symbols, and it is clear that a data lake contains many different storage types, while the data warehouse is fully relational.

Data sharing plays a key role in business processes across the enterprise, from product development and internal operations to customer experience and regulatory compliance. Increasingly, organizations need to share data sets, large and small, with their business units, customers, suppliers, and partners. Security is critical, as are efficiency and instant access to the latest data. Using an open and secure exchange technology helps to maximize the pool of potential exchange partners by removing the barriers of vendor technology lock-in.

With a unified data security system, the permissions model can be centrally and consistently managed across all data assets. Data access is centrally audited with alerting and monitoring capabilities to promote accountability. This information is critical for almost all compliance requirements for regulated industries and is fundamental to any security governance program.

Data quality is fundamental to deriving accurate and meaningful insights from data. Data quality has many dimensions, including completeness, accuracy, validity, and consistency. It must be actively managed to improve the quality of the final data sets so that the data serves as reliable and trustworthy information for business users.
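As a rough illustration of the data quality dimensions named above, the following Python sketch measures completeness, validity, and one simple form of consistency on a toy data set. The records, field names, and the age rule are all made up for this example. Accuracy is left out because it can only be judged against a trusted reference source.

```python
# Toy records, invented for this illustration.
records = [
    {"id": 1, "country": "SE", "age": 34},
    {"id": 2, "country": None, "age": 51},
    {"id": 3, "country": "se", "age": -4},
]


def completeness(rows, field):
    """Share of rows in which the field is present and not None."""
    return sum(r.get(field) is not None for r in rows) / len(rows)


def validity(rows, field, is_valid):
    """Share of non-missing values that pass the given rule."""
    values = [r[field] for r in rows if r.get(field) is not None]
    return sum(is_valid(v) for v in values) / len(values) if values else 1.0


def inconsistent_spellings(rows, field):
    """Values that differ only by letter case, a simple consistency signal."""
    seen = {}
    for r in rows:
        value = r.get(field)
        if isinstance(value, str):
            seen.setdefault(value.upper(), set()).add(value)
    return {key: sorted(variants) for key, variants in seen.items() if len(variants) > 1}


country_completeness = completeness(records, "country")
age_validity = validity(records, "age", lambda v: 0 <= v <= 120)
country_variants = inconsistent_spellings(records, "country")
```

Tracking such ratios over time is one way to manage quality actively rather than discovering problems only when a report looks wrong.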

Data management is the foundation for executing the data governance strategy. It involves the collection, integration, organization, and persistence of trusted data assets to help organizations maximize their value. A unified catalog centrally and consistently stores all your data and analytical artifacts, as well as the metadata associated with each data object. It enables end users to discover the data sets available to them and provides provenance visibility by tracking the lineage of all data assets. There are two tenets of effective data security governance: understanding who has access to what data, and who has recently accessed what data.
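The two tenets of data security governance (who has access to what, and who has recently accessed what) map naturally onto two small lookups. The sketch below is a minimal illustration, not the API of any real catalog or governance product: the `grants` set, the `audit_log` list, and all principal and asset names are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical permission grants as (principal, data asset) pairs.
grants = {
    ("alice", "sales.orders"),
    ("bob", "sales.orders"),
    ("alice", "hr.salaries"),
}

# Hypothetical centrally collected access log.
audit_log = [
    {"who": "alice", "asset": "sales.orders", "at": datetime(2024, 1, 10, 9, 0)},
    {"who": "bob", "asset": "sales.orders", "at": datetime(2023, 11, 2, 14, 30)},
    {"who": "mallory", "asset": "hr.salaries", "at": datetime(2024, 1, 11, 8, 15)},
]


def who_has_access(asset):
    """Tenet 1: which principals are granted access to the asset."""
    return sorted(principal for principal, a in grants if a == asset)


def who_recently_accessed(asset, now, window=timedelta(days=30)):
    """Tenet 2: which principals touched the asset within the time window."""
    return sorted(
        {e["who"] for e in audit_log if e["asset"] == asset and now - e["at"] <= window}
    )


def unauthorized_accesses():
    """Log entries with no matching grant, a candidate for alerting."""
    return [e for e in audit_log if (e["who"], e["asset"]) not in grants]


now = datetime(2024, 1, 15)
granted = who_has_access("sales.orders")
recent = who_recently_accessed("sales.orders", now)
suspicious = [e["who"] for e in unauthorized_accesses()]
```

Keeping grants and the access log in one place is what makes the cross-check in `unauthorized_accesses` possible; with permissions scattered across systems, the same question requires joining several sources first.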
